The Benefits of Using Automatic Speaker Recognition in LEAs’ Investigative Processes

When Phonexia introduced the first commercially available voice biometrics engine powered entirely by Deep Neural Networks (DNNs) in 2018, it marked a milestone for automatic speaker recognition. Since then, the DNN-based approach has become the norm for cutting-edge technologies that recognize a person’s identity with voice biometrics. Such systems are trained to automatically extract the unique properties of a person’s voice from an audio recording and return a statistical probability that the speaker’s voice is the same as the voice extracted from a different audio recording. The speed and automatic nature of speaker recognition systems enable law enforcement agencies around the world to gather the insights they need quickly and to investigate organized crime of any scale efficiently.

The reliability and usefulness of automatic speaker recognition systems rise and fall with the quality of the audio recordings. These systems work best on recordings whose audio quality is similar to that of the data used to train them. It is therefore important to keep in mind the variables that may distort the results, such as high background noise, a different audio channel, or too much echo in the recordings. Nevertheless, today’s speaker recognition systems are sophisticated and can cope with audio data discrepancies to a certain level (e.g., by enabling cross-channel speaker recognition or background noise reduction).

The extraction of a person’s unique voice characteristics from a legally obtained audio recording is done via a so-called voiceprint, a mathematical expression of the person’s unique voice properties saved into a file. The voiceprint file size can be as little as 3 kB, depending on the vendor and technology used. As voiceprints are not recordings of a person’s voice, they cannot be used to reconstruct a person’s voice or the words that were said in a given audio recording.

This makes voiceprints very safe to handle and to share among different law enforcement units for effective investigation. Their very small size also makes them easy to compare with each other: top-class automatic speaker recognition systems can compare hundreds of thousands of voiceprints in less than one second.

Whenever two voiceprints are compared, the system generates a Log-Likelihood Ratio (LLR) score to determine whether they belong to the same speaker. A high LLR score indicates that the system is very sure that the two voiceprints are from the same speaker. A low LLR score indicates that the system is very sure that the voiceprints are from different speakers. These scores can then be used for further analysis of the audio recordings or for the presentation of findings in court (e.g., by using the Probability Density Function as a graphical representation of the results).
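To make the meaning of an LLR score more concrete, here is a minimal Python sketch. It assumes that raw comparison scores for same-speaker pairs and for different-speaker pairs each follow a normal distribution, and it computes the LLR as the log of the ratio of the two probability densities at the observed score. The function names and the calibration numbers are hypothetical and chosen only for illustration; real systems may compute and calibrate their scores differently.

```python
import math

def gaussian_log_pdf(x, mean, std):
    """Log of the normal density N(mean, std^2) evaluated at x."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)

def llr(raw_score, same_mean, same_std, diff_mean, diff_std):
    """Log-likelihood ratio: log p(score | same speaker) - log p(score | different speakers)."""
    return (gaussian_log_pdf(raw_score, same_mean, same_std)
            - gaussian_log_pdf(raw_score, diff_mean, diff_std))

# Hypothetical calibration parameters (mean, standard deviation) of raw scores.
SAME = (0.7, 0.1)  # assumed distribution for same-speaker pairs
DIFF = (0.1, 0.1)  # assumed distribution for different-speaker pairs

high = llr(0.65, *SAME, *DIFF)     # raw score close to the same-speaker mean
low = llr(0.15, *SAME, *DIFF)      # raw score close to the different-speaker mean
neutral = llr(0.40, *SAME, *DIFF)  # raw score halfway between the two means

print(high > 0, low < 0)  # positive LLR supports "same speaker", negative supports "different"
```

In this sketch, an LLR near zero (as for `neutral`) means the score is about equally likely under both hypotheses, so the comparison gives little support either way.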

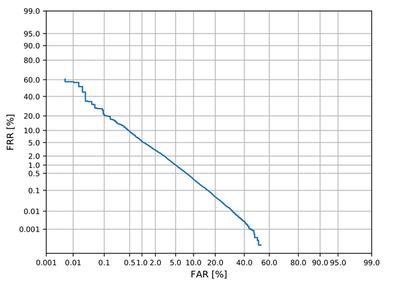

The accuracy of speaker recognition technologies is typically described with the Detection Error Tradeoff (DET) curve. It is calculated on a set of evaluation voiceprint pairs, each labeled either “match” or “do not match”. These voiceprint pairs are sent to the voiceprint comparison module, and the resulting scores are compared with a threshold. Two kinds of errors can occur, each measured as a rate:

- False Acceptance Rate (FAR): the percentage of cases in which the system incorrectly treats voiceprints from different people as belonging to the same speaker.

- False Rejection Rate (FRR): the percentage of cases in which the system incorrectly treats voiceprints from the same person as belonging to different speakers.

Each threshold yields two values, one FAR and one FRR. By varying the threshold, we can plot the whole curve. Here is an example of a curve calculated based on the National Institute of Standards and Technology Speaker Recognition Evaluation (NIST SRE) 2016 data set:

The closer the curve is to the point [0,0], the better the speaker recognition system is. The point on the DET curve at which the FAR and the FRR are equal is called the Equal Error Rate (EER). The system’s accuracy also depends on the amount of speech evidence available, that is, on the length of speech used to create a voiceprint.
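The threshold sweep described above can be sketched in a few lines of Python. This is a minimal illustration on made-up scores, not the evaluation procedure of any particular vendor or of NIST SRE. The function names `error_rates`, `det_points`, and `approximate_eer` are hypothetical, and the convention that a score at or above the threshold counts as a match is an assumption.

```python
def error_rates(scores, labels, threshold):
    """Return (FAR, FRR) for one threshold.

    labels[i] is True when pair i comes from the same speaker ("match").
    Assumption: a score >= threshold is treated as an accepted match.
    """
    genuine = [s for s, same in zip(scores, labels) if same]
    impostor = [s for s, same in zip(scores, labels) if not same]
    far = sum(s >= threshold for s in impostor) / len(impostor)  # wrongly accepted
    frr = sum(s < threshold for s in genuine) / len(genuine)     # wrongly rejected
    return far, frr

def det_points(scores, labels):
    """One (threshold, FAR, FRR) point per distinct score used as a threshold."""
    return [(t, *error_rates(scores, labels, t)) for t in sorted(set(scores))]

def approximate_eer(scores, labels):
    """Pick the point where FAR and FRR are closest; return (threshold, EER estimate)."""
    t, far, frr = min(det_points(scores, labels), key=lambda p: abs(p[1] - p[2]))
    return t, (far + frr) / 2

# Made-up evaluation set: four same-speaker pairs and four different-speaker pairs.
SCORES = [4.0, 3.1, 2.5, 0.4, 1.0, -0.5, -2.0, -3.2]
LABELS = [True, True, True, True, False, False, False, False]

threshold, eer = approximate_eer(SCORES, LABELS)
print(threshold, eer)  # 1.0 0.25
```

On this toy data, the FAR and the FRR are both 25% at a threshold of 1.0, so the estimated EER is 25%. With real evaluation sets the two rates rarely coincide exactly at a tested threshold, which is why the sketch picks the closest point and averages the two rates.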

The main benefit of speaker recognition systems is the automation of data processing, which saves a significant amount of time that would be otherwise spent on individual analysis.

Traditional manual forensic analyses are language-dependent and time-consuming, while automatic speaker recognition systems are fast and language-independent (nevertheless, the role of a forensic expert remains irreplaceable for the presentation of the results). Other voice biometric information, such as the gender of a speaker, can also be extracted automatically from a person’s voice. The bottom line is that current automatic speaker recognition systems, such as the one currently being built by the ROXANNE consortium, can greatly improve the efficiency of analytical work, enabling law enforcement agencies of any size to investigate faster.